GeoComputation: the next 20 years (Andy Evans)

Author: Andy Evans.

In many respects, GeoComputation is a field whose time has come. If we take GeoComputation to be "The Art and Science of Solving Complex Spatial Problems with Computers"1, GeoComputation is now the go-to area for both professional analysts and the public. Twenty years ago, in 1996, when the field was launched with the first international GeoComputation conference in Leeds, UK, it was the passion of a few excited coders and geographers. Now, the analysis, visualisation, and use of spatial data are everywhere. It is therefore a great time to reflect on what the future holds for "GeoComp" – both as an academic subject, and more broadly within society. With this in mind, the community has been holding a number of events looking forward to the next 20 years in the run-up to the next conference, when the series will return to Leeds for a celebration and reflection.

It seems clear, at least at the moment, that the next 20 years will be shaped by the growth of data. After years of bemoaning the lack of data for analysis and policy making, we now have vast amounts of information, albeit of varying quality and coverage, with which to understand the world and society. However, it seems worth reflecting on the nature of that data and how that nature will affect our community. With that data expansion we are also seeing a shift towards information that differs in two ways: the first is a shift towards immediacy as a time-frame, and the second is a shift to the individual as a unit of information. These changes bring some key opportunities, but also some key responsibilities for the GeoComp community.

These two changes to data are already playing out in people's lives, in interlinked ways. This is particularly clear in the growing field of what we might call "bespoke individual policies". For example, we have already seen the growth of car insurers offering reductions in premiums based on voluntary GPS tracking, along with healthcare insurers setting lower fees based on fitness-tracker activity profiles. Although such policies are generally not enacted directly by governments, they are frequently supported by governments as enacting worthwhile social adjustments (for example, by allowing flexibility in insurance regulations). This growth of quick-response, individual-level 'opportunities' is a more targeted, and therefore one might argue, more engaging system than aggregate policy making, and one that would seem increasingly popular in a neo-liberal capitalist milieu that is centred on personal responsibility and opportunity. And, in fact, closer examination of government areas like the tracking and support of individual school pupils and the tackling of long-term unemployment shows that often the approach to target achievement by government departments is (at least nominally) to disaggregate support and tracking to the individual level. In part this is because it is generally the right location for support, but also to somewhat devolve failings to individuals rather than to aggregate policy; in UK press rhetoric at least, the discussion is not about investment in areas, but about the failings of "problem families" and "skivers" – though in reality the area-based approach to investment continues in parallel. Finally, we have also seen the growth of algorithmic management in the so-called "gig economy"2: zero-hour contracts and microworkers controlled at an individual level by algorithmic work policies. Such a movement brings a great many tricky questions: can policies be automated, and to what time scale? What does it mean to vote for a long-term government, when policies are individual, bespoke, short-term, and commercially applied? These are questions to which a community with expert computational knowledge and a strong social understanding is well placed to contribute.

In many senses, GeoComputation should be keen to embrace the data changes at least. For years, we have struggled with two major statistical issues. The first is the Ecological Fallacy: the problem that we have previously needed to build policy around areas, not people, and that by doing so we have to assume that everyone in an area is represented by some prototypical description of what people in that area are like – if you live in an area where your neighbours all read a right-wing broadsheet newspaper, you too will, needs must, be treated like a right-wing broadsheet reader. The second is the Modifiable Areal Unit Problem (the "MAUP"): that aggregated statistics like averages change depending on where you draw area boundaries, as different groups of people are included in each area once drawn. Individual-level policy application and analysis seem like positive moves against these issues and ones we should embrace. In addition, individual-level data brings greater nuance and understanding of lived experience, filling in the gaps of detail and coverage so long bemoaned in aggregate and yearly datasets. As such, it should contribute to better aggregate analysis and policy making as well as more individual-level applications. This doesn't just apply to human geography: whether it is understanding people's daily routines, or the territory of individual animals, or the way a rock moves within bedload, the shift to individual and instant data promises to help us understand the world and better contribute to solving the problems we see in it.
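To make the two statistical issues concrete, here is a minimal sketch in Python. The data are invented purely for illustration: six hypothetical people placed along a street, each with a made-up weekly income, aggregated under two hypothetical zoning schemes. It is a toy, not a recommended analysis method.

```python
# Hypothetical individuals: (position along a street in km, weekly income).
# All values are invented for illustration only.
people = [(0.5, 200), (1.5, 220), (2.5, 800), (3.5, 820), (4.5, 210), (5.5, 230)]


def zone_means(people, boundaries):
    """Return the mean income per zone.

    Zone i covers the half-open interval [boundaries[i], boundaries[i + 1]).
    Empty zones are reported as None.
    """
    means = []
    for lower, upper in zip(boundaries, boundaries[1:]):
        values = [income for position, income in people if lower <= position < upper]
        means.append(sum(values) / len(values) if values else None)
    return means


# Same people, two different ways of drawing the area boundaries.
scheme_a = zone_means(people, [0, 2, 4, 6])  # three 2 km zones
scheme_b = zone_means(people, [0, 3, 6])     # two 3 km zones

print("Scheme A zone means:", scheme_a)
print("Scheme B zone means:", scheme_b)
```

Under the three-zone scheme the high-income cluster in the middle of the street stands out clearly; under the two-zone scheme it is split between zones and both averages look similar, which is the MAUP in miniature. The person at 4.5 km, with an income of 210, would be described by a zone average roughly twice that under the second scheme, which is the Ecological Fallacy at work.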

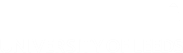

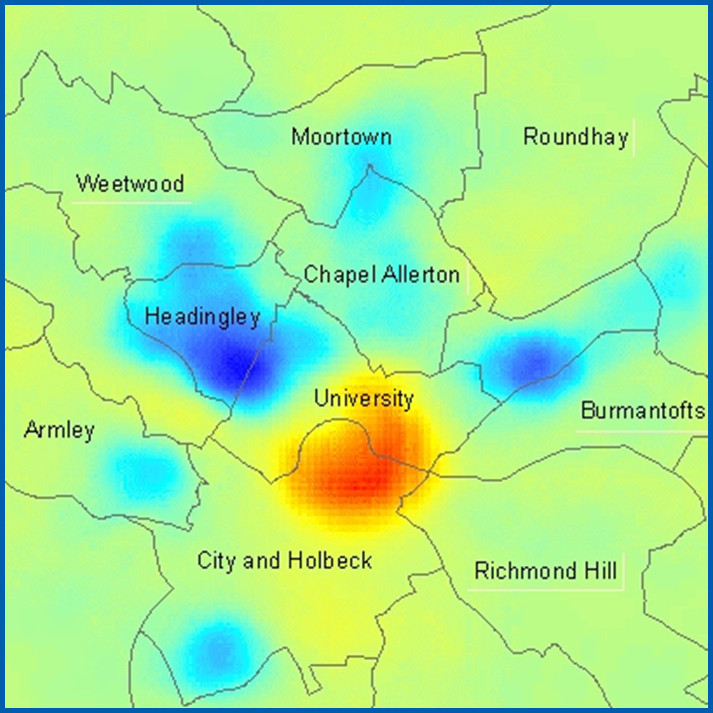

Census and daytime populations at risk from crime3

In that sense, one of the key improvements GeoComputation needs to make in the next 20 years is not only improved spatio-temporal pattern finding (what is the relationship between aggregates of things over time in space), but also a better understanding of how smaller elements fit into those larger patterns. Complex systems analysis has taught us that small changes at the individual level across a population can have big effects at the system level, but we have yet to develop the tools we need to track how individual-level behaviours induce 'emergent' large-scale patterns, and link those scales through time (tracking both forwards and backwards). Moreover, we need better tools for doing this in dynamic systems that are evolving before our eyes: we need methods and models that utilise the instantaneous reporting of many modern datasets, producing the kinds of short-term, high-quality, local analyses and predictions that could feed into more agile, responsive (and potentially automated) policy-making, whether that be in responding to floods, local resource needs, or ecological protection.

However, I don't think I'm alone in feeling ethically 'queasy' about this area, and it seems clear that the GeoComputational community has a shared responsibility to make sure that individual-level data is used to enhance individual freedoms while also supporting the development of a fair, supportive, and sustainable society more broadly – a balance that is surely a key issue in modern, if not most, civilisations. As the people developing and managing the foundations of many of these implementations, we need to be the group that points out the pitfalls of these techniques and keeps them from being tools of suppression and dissimulation.

Traditionally, one of the dangers of the data-driven treatment of people is that we lose sight of individuals as human beings. To choose but two examples, Götz Aly and Susanne Heim have shown the place of geographers and quantitative social scientists in the construction of the Holocaust, in part as a solution to population and land management in 1930s Germany4; while Geoffrey Bowker and Susan Star have shown the place of census statisticians in the apartheid system5. I think most GeoComputational people are aware of this danger in more everyday settings as well. Indeed, in some respects one of the potential positives of the shift to the individual data subject is that it responds to the demands of the "cultural turn" in geography during the 1970s and 80s, that geography needs to consider individual lived experience more. Nevertheless, it should be clear that simply concentrating on the individual does not relieve us of the potential that we might treat people as mere numbers, albeit smaller ones.

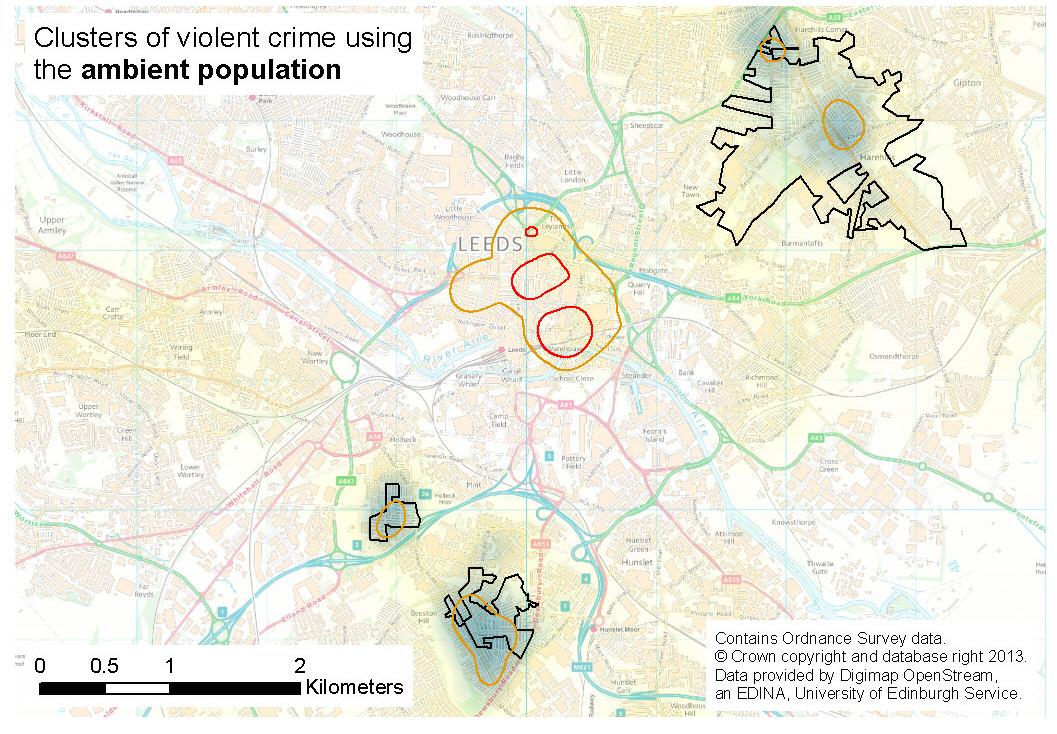

A positive move in this respect, and one GeoComputation needs to highlight more, is what one might call the "cognitive movement" in quantitative geography. While much work in the field does treat individuals as numbers, there is also an increased interest in quantitatively capturing how people understand and interact with the world in a more nuanced fashion. The popular methodology of Agent-Based Modelling is generally constructed around a cognitive framework that encompasses Beliefs, Desires, and Intentions (plans). Equally, the vernacular and crowd-sourced understanding of the world is increasingly an important data area; with this understanding being extracted from social media and folksonomy (tag-based) datasets. This shift from "real" data to capturing and modelling beliefs about the world should let us concentrate on what people understand about objects and relationships in the world (what we might call a "doxastic geography"), and how they use those beliefs. This seems to me to be a key area of potential over the next 20 years, especially when it comes to GeoComputation contributing to issues of cross-cultural understanding, and the resolution of conflicts in resource use.
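As a rough illustration of the Beliefs, Desires, and Intentions idea mentioned above, the following Python sketch shows a toy agent choosing a route home. Everything here is an assumption made for illustration: the `Walker` class, the street names, the weights, and the simple belief-update rule are hypothetical, and real agent-based models implement this framework in many different ways.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Walker:
    """Hypothetical BDI-style agent choosing a route home."""

    # Beliefs: perceived (not measured) crime level per street, from 0 to 1.
    beliefs: dict = field(default_factory=dict)
    # Desires: how much the agent cares about each goal (illustrative weights).
    desires: dict = field(default_factory=lambda: {"safety": 0.7, "speed": 0.3})
    # Intention: the plan the agent has currently committed to.
    intention: Optional[str] = None

    def perceive(self, street, reported_crime, trust=0.5):
        """Nudge the belief about a street towards a new report (e.g. hearsay)."""
        old = self.beliefs.get(street, 0.5)  # 0.5 = no strong prior belief
        self.beliefs[street] = old + trust * (reported_crime - old)

    def deliberate(self, routes):
        """Commit to the route that best satisfies the desires, given the beliefs.

        `routes` maps a street name to its travel time in minutes.
        """
        slowest = max(routes.values())

        def score(street):
            safety = 1.0 - self.beliefs.get(street, 0.5)
            speed = 1.0 - routes[street] / slowest
            return self.desires["safety"] * safety + self.desires["speed"] * speed

        self.intention = max(routes, key=score)
        return self.intention


agent = Walker()
agent.perceive("High Street", reported_crime=0.9)  # hears it is dangerous
agent.perceive("Park Lane", reported_crime=0.1)    # hears it is safe
choice = agent.deliberate({"High Street": 10, "Park Lane": 15})
print("Chosen route:", choice)
```

The point of the sketch is that the agent's behaviour follows from what it believes about the streets, not from any measured crime rate; the two may or may not agree.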

Perceived high crime areas vs real crime statistics. Users spraycanned a map with their beliefs about where crime was high and this was then compared with real stats: red areas are where average perceptions of crime were higher, in a very rough sense, than expected from average crime statistics.6

More immediately, the GeoComp community also has a responsibility to see that new work is completed without repeating the mistakes of the past. Although it is clear that there is a growing use of data on us by companies, we have come a long way from the genuine era of the "data elite", where a few select organisations had control over all data and it was impossible for anyone else to engage with information to argue their case. Increasingly the arguments over the way society manages the world are conducted not just including data, but with data as a medium and arena. When everyone has access to the tools we have taken an important role in developing, we have a responsibility to highlight what we have learnt about methodological issues and make sure the tools are used well, walking that delicate refereeing balance between highlighting some quite complex statistical issues (the MAUP, spatial autocorrelation, the Ecological Fallacy) while not acting as a pathway for suppressing debate. We also, as ever, need to continue to provide rigour to analyses, highlighting error and confidence limits. One key issue for the next 20 years is not only how we track emergence through dynamic systems, but also how we track error and confidence in specific evolving system components.

We also have a responsibility, in an increasingly immediate world centred on the individual, to point out three key issues. The first is that we have a history: the paths we have taken are complex and fraught with difficulties, and reflecting on them helps us better understand the problems ahead of us, not least because reflecting on one's history is a key part of personal growth. Secondly, we need to open up the spaces where data can contribute to people's wellbeing: we need to resist the temptation to concentrate on those datasets collected for and by companies and the government, and their associated uses, and show that data can contribute to improving people's lives in areas beyond commercial and social management: in areas of leisure and pleasure; art and understanding; environment and health. Finally, we need to fight to maintain some sense of society in a milieu that is increasingly individual-centred, despite economists and policy analysts increasingly proclaiming the failure of purely market-centred approaches. We need to think through what duty people have to contribute data to the positive management of society while also ensuring that a world in which control is increasingly focused on the individual level responds fairly and agilely to people's needs. This will involve avoiding the pitfall of assuming people never change; giving them the breathing space and anonymity necessary for personal growth and avoiding undue harassment; and embedding them within the aggregate responsibilities (and joys) we all have to support and create a better society. One of the many potential things lost in a concentration on the individual is the sense of duty and altruism that is key to running a fair, supportive, and sustainable society, and if all our concentration is on individuals, we risk losing sight of that to the detriment of current and future societies.

For me, the GeoComputational community is well placed to contribute to these debates and build the tools necessary to negotiate these complicated issues. We have the computational background to build the numeric tools, but also the humanistic background to understand how those tools affect people's lives; we're excited by the potential for data, but equally understand that data is neither perfect nor a complete representation; and we understand both the social issues at the heart of worthwhile data use, and also the statistical and application issues. It is easy to drop into partisan thinking on the data issue: either one is against individual-level instant data as encouraging "Big Brother", or one is for it as an opportunity for the invisible hand of capitalism. I think, however, there is a middle ground; one where data supports the better understanding of society and the environment and fills many of the gaps in our previous knowledge, with uses that concentrate on solving key social and environmental issues. As it happens, this has generally been the space that the GeoComputational community has carved out for itself whatever the data, and I think this bodes well for the liveliness and relevance of the community going forward. It is, in short, my hope that one major contribution the GeoComputational community can make over the next 20 years, as data becomes more and more key to the way we live our lives, is to build and oversee the development of tools for a better, more supportive, and kinder society, a society that knows when data is important, and when it is not.

Andy Evans, Co-Secretary, International GeoComputation Conference steering group.

Notes

1 As suggested back in the day by some fresh-faced tagline writer for the GeoComputation conference series website.

2 Sarah O'Connor (2016) When your boss is an algorithm, Financial Times Online, 8th Sept 2016.

3 Malleson N; Andresen MA (2015) The impact of using social media data in crime rate calculations: shifting hot spots and changing spatial patterns, Cartography and Geographic Information Science, 42.

4 Götz Aly and Susanne Heim (2002) Architects of Annihilation: Auschwitz and the Logic of Destruction, London: Weidenfeld & Nicolson. This substantial review by Jeremy Noakes is worth reading.

5 Geoffrey C. Bowker and Susan Leigh Star (1999) Sorting Things Out: Classification and Its Consequences, MIT Press.

6 Evans, A.J. and Waters, T. (2007) Mapping Vernacular Geography: Web-based GIS Tools for Capturing "Fuzzy" or "Vague" Entities, International Journal of Technology, Policy and Management, 7, (2) 134-150.